How can you land 5 kilometers above the Moon?

The near success and crash of the HAKUTO-R Mission 1

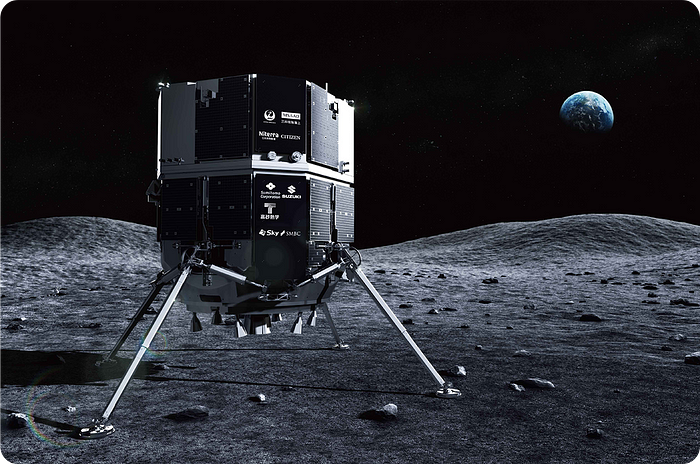

In April 2023, the Japanese space exploration company ispace attempted to land the HAKUTO-R Mission 1 on the Moon. The first stages of the mission proceeded flawlessly: launching in December 2022, achieving lunar orbit in March 2023, and initiating the landing sequence on April 26, 2023, at 00:40 Japan Standard Time.

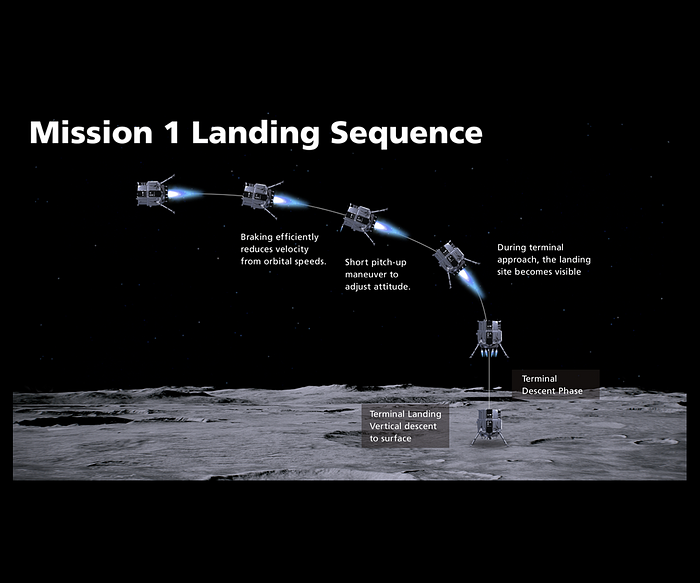

Even the descent of the lander started out successfully. The spacecraft’s route was followed closely by the flight controllers in Japan and broadcast around the world. Telemetry showed the lander slowing down on schedule and following the planned path towards the lunar surface.

The onboard computer consulted the onboard instruments and constantly adjusted the flight of HAKUTO-R M-1, bringing it to a nearly perfect stop, just as planned, slightly above the lunar surface. Everything was ready for the first lunar landing by a private/commercial company.

Everything, that is, except for the fact that HAKUTO-R M-1 was not a few meters above the lunar surface but five kilometers above it when it ran out of fuel and plummeted to its destruction far below.

Controllers watched helplessly as the autonomous spacecraft hovered above the Moon, convinced that it was moments from rest, and then watched the spacecraft pick up speed as it fell, faster and faster, until it impacted the ground at around 2am Japan Time. Within a few days, photographs from the Lunar Reconnaissance Orbiter, which has been circling the Moon since 2009, showed the debris on the lunar surface.

ispace have recently published the findings from their “post-incident review” and many of these are relevant to those of us on the ground who have to deal with failures in the systems we support.

I will summarize a few illuminating highlights.

First, while the mission obviously was an overall failure, it’s important to highlight its successes: of the 10 objectives or milestones, 8 were achieved. In the same way, our post-incident reviews should balance what went right against what went wrong: what have we learnt that was correct and that we should do again next time?

Next, the failure occurred because HAKUTO-R M-1 thought it was at the surface of the Moon when it was actually high above it. What caused this discrepancy? There were a number of primary contributing factors, both technical and process-oriented.

The spacecraft had an internal radar or altimeter sensor which told it what its altitude (height above the ground) was. Because sensors can be faulty, as Site Reliability Engineers (SREs), DevOps engineers, sysadmins, and spacecraft engineers know very well, there were a number of software conditions which “watched the watcher” and told the spacecraft whether it could trust the measurements coming out of the sensor. One of these conditions was “our altitude changes in proportion to our speed & acceleration”. In other words, if we’re descending at 10 meters a second and our height suddenly changes by 1 km in one second, then something is wrong with the sensor and we’d better start ignoring it!
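The “watch the watcher” rule can be sketched in a few lines of Python. This is a minimal illustration and not ispace’s flight software: the function name, its parameters, and the 50-meter tolerance are all assumptions made up for the example.

```python
def reading_is_plausible(
    prev_altitude_m: float,
    new_altitude_m: float,
    descent_rate_mps: float,
    dt_s: float,
    descent_accel_mps2: float = 0.0,
    tolerance_m: float = 50.0,  # hypothetical threshold, chosen for illustration
) -> bool:
    """Return True if the new altimeter reading agrees with our own motion."""
    # How far we expect to have dropped, given speed and acceleration.
    expected_drop_m = descent_rate_mps * dt_s + 0.5 * descent_accel_mps2 * dt_s**2
    expected_altitude_m = prev_altitude_m - expected_drop_m
    return abs(new_altitude_m - expected_altitude_m) <= tolerance_m


# Descending at 10 m/s, one second later we are 10 m lower: plausible.
print(reading_is_plausible(1000.0, 990.0, 10.0, 1.0))   # True
# Descending at 10 m/s, but the height changed by 1 km in one second: rejected.
print(reading_is_plausible(1000.0, 2000.0, 10.0, 1.0))  # False
```

Note that the check only compares the reading with the spacecraft’s own motion. It quietly assumes that the ground below stays at roughly the same height.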

Similarly, we, as SREs, use measurements known as Golden Signals to monitor the performance of our service and we generally expect there to be relationships between measurements.

Let’s imagine we’re supporting an online store selling model spacecraft. When monitoring the store, we might measure latency (how fast the store responds to requests) and traffic (how many people are using the store right now).

We’d then expect latency to change in proportion to traffic. If latency is degraded (the service running the store is slow) while traffic is up, we say “Naturally, the more people are using our service the slower it runs. We’d better add more resources to get back to the right speed”.

But when traffic is stable (no additional users) and latency is degrading then we say “Uh, oh… something’s not working right. We’d better investigate and fix it”.
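As a rough sketch, the two reactions above could be encoded like this. The function name, the 10% tolerance, and the returned labels are hypothetical; a real monitoring setup would express this as alerting rules in whatever tool you use.

```python
def diagnose(
    traffic_before: float,
    traffic_after: float,
    latency_before_ms: float,
    latency_after_ms: float,
    tolerance: float = 0.10,  # hypothetical: ignore changes under 10%
) -> str:
    """Classify a latency change by whether traffic explains it."""
    traffic_growth = (traffic_after - traffic_before) / traffic_before
    latency_growth = (latency_after_ms - latency_before_ms) / latency_before_ms

    if latency_growth <= tolerance:
        return "healthy"      # latency has not meaningfully degraded
    if traffic_growth > tolerance:
        return "scale up"     # more users explain the slowdown
    return "investigate"      # slower with no extra users: something is wrong


print(diagnose(100, 200, 100, 180))  # scale up
print(diagnose(100, 100, 100, 180))  # investigate
```

Like the altimeter rule, this only works while latency really does depend on traffic alone.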

Back on the Moon, however, the spacecraft overflew a 3 km high cliff, which meant that the sensor was absolutely correct when it reported a sudden height change! But since this anomalous (and correct!) report broke the “altitude changes in proportion to our speed & acceleration” rule, the spacecraft computer steadfastly ignored further reports from the altimeter and used other, less accurate, methods to estimate how high it was above the surface.

Ignoring the signal from the altimeter, the spacecraft thought that it was at 0 meters above the surface and hovered there… slowly running out of fuel and thinking that it would just drop down a few meters. But the truth was with the ignored sensor and once there was no fuel to keep it flying, it descended to the true “altitude 0”, about 5,000 meters below.
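To see how a correct sensor can end up permanently ignored, here is a toy simulation. Every number in it is illustrative and not taken from ispace’s review, the altimeter is assumed to be perfectly accurate, and the fallback estimate is plain dead reckoning, which is an assumption about what “other methods” might look like.

```python
def simulate_descent(
    start_altitude_m: float = 100.0,
    descent_rate_mps: float = 10.0,
    terrain_drop_m: float = 3000.0,  # the ground falls away by this much
    drop_at_s: int = 5,              # second at which we pass the cliff edge
    tolerance_m: float = 50.0,       # hypothetical plausibility threshold
) -> tuple[float, float]:
    """Return (estimated altitude, true altitude) when the computer thinks it landed."""
    true_altitude_m = start_altitude_m
    estimated_altitude_m = start_altitude_m
    sensor_trusted = True
    t = 0
    while estimated_altitude_m > 0:
        t += 1
        true_altitude_m -= descent_rate_mps
        if t == drop_at_s:
            true_altitude_m += terrain_drop_m  # we just overflew the cliff

        reading_m = true_altitude_m  # the altimeter reports the truth
        predicted_m = estimated_altitude_m - descent_rate_mps
        if sensor_trusted and abs(reading_m - predicted_m) > tolerance_m:
            sensor_trusted = False  # latched: the sensor is never consulted again

        estimated_altitude_m = reading_m if sensor_trusted else predicted_m
    return estimated_altitude_m, true_altitude_m


estimated, actual = simulate_descent()
print(estimated, actual)  # 0.0 3000.0
```

The computer’s estimate reaches zero while the true altitude is still 3,000 meters in this toy setup, the same kind of gap that doomed the real lander.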

So there was an unexpected and unknown precondition to the truth of the technical rule of “altitude changes in proportion to speed & acceleration” — and that is that the surface height doesn’t change suddenly.

The next question therefore becomes — why didn’t the ispace engineers plan the flight path of the spacecraft and see that it would overfly the cliff? If a practice run showed that the altitude suddenly changed then they could have modified the condition that told the computer to ignore the radar and that would have saved the mission!

And the answer is that they had practiced the mission and during the (many, many, many) practice runs the altitude had never changed as abruptly as it did during the real landing mission. And this is where the next contributing factor to the failure comes in — the landing site was changed some time after the tests and the tests were not re-done with the updated landing site location!

This meant, of course, that the underlying technical assumption (altitude changes in proportion to speed & acceleration) no longer held, because the altitude could now also change due to a sudden change in the terrain.

Returning to our terrestrial example of latency and traffic in our spacecraft model store, it’s as if latency is also connected to how many kinds of models we stock — and then we suddenly doubled our inventory. That would mean that our system was running slowly without any reasonable explanation, since we didn’t think to measure the variety of models in stock!

After their investigation and analysis, ispace said that they have learned from the errors during the mission and are confident in the success of the next missions because:

We will ensure that the valuable knowledge gained from Mission 1 will lead us to the next stage of evolution.

— Takeshi Hakamada, Founder and CEO of ispace

In summary, ispace has learnt many lessons from the partial success of HAKUTO-R M-1, many of which are relevant to us here on Earth. A few highlights:

- Don’t change mission parameters without redoing the relevant testing: this would have exposed that the mission was overflying a cliff and prompted a change to the technical rule which caused the spacecraft to ignore the radar.

- Allow for recovery from failures: this would have enabled the spacecraft to consult the radar again and see that it was reporting consistent data.

- As a rule, validating data and telemetry is a good thing, but make sure you’re not throwing out good data in the pursuit of perfect data.
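The second and third lessons can be combined in one sketch: a filter that stops trusting a sensor after an implausible reading, but keeps listening and trusts it again once its readings are consistent with each other. This is one possible design and not what ispace flew or plans to fly; the class name and both thresholds are hypothetical.

```python
class RecoverableAltimeterFilter:
    """Reject implausible readings, but let a consistent sensor earn trust back."""

    def __init__(self, tolerance_m: float = 50.0, readings_to_recover: int = 3):
        self.tolerance_m = tolerance_m                  # hypothetical threshold
        self.readings_to_recover = readings_to_recover  # hypothetical streak length
        self.trusted = True
        self.estimate_m = None
        self._last_reading_m = None
        self._streak = 0

    def update(self, reading_m: float, descent_rate_mps: float, dt_s: float = 1.0) -> float:
        expected_drop_m = descent_rate_mps * dt_s
        if self.estimate_m is None:  # first reading: nothing to compare against
            self.estimate_m = reading_m
            self._last_reading_m = reading_m
            return self.estimate_m

        predicted_m = self.estimate_m - expected_drop_m
        if self.trusted:
            if abs(reading_m - predicted_m) <= self.tolerance_m:
                self.estimate_m = reading_m
            else:
                self.trusted = False  # stop using the sensor, but keep listening
                self._streak = 0
                self.estimate_m = predicted_m
        else:
            # Is the sensor consistent with its own previous reading?
            sensor_predicted_m = self._last_reading_m - expected_drop_m
            if abs(reading_m - sensor_predicted_m) <= self.tolerance_m:
                self._streak += 1
            else:
                self._streak = 0
            if self._streak >= self.readings_to_recover:
                self.trusted = True  # the data was good all along
                self.estimate_m = reading_m
            else:
                self.estimate_m = predicted_m

        self._last_reading_m = reading_m
        return self.estimate_m


altimeter = RecoverableAltimeterFilter()
for reading in [90, 80, 70, 3060, 3050, 3040, 3030]:  # the cliff is at 3060
    estimate = altimeter.update(reading, descent_rate_mps=10.0)
print(altimeter.trusted, estimate)  # True 3030
```

The trade-off is that a sensor which is wrong in a consistent way would also be trusted again, so the streak length and tolerance would need the same careful testing as the original rule.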

I’m looking forward to their next missions and hope that all of their milestones succeed. In Japanese mythology, Hakuto is the white rabbit living on the Moon. He’s waiting for his robotic companion to join him.

For future lessons and articles, follow me here as Robert Barron or as @flyingbarron on Twitter and LinkedIn.